Many of you are using PF_RING and TNAPI to accelerate packet capture, but have probably not tested the code for a while. In the past month we have tuned PF_RING performance and squeezed out some extra captured packets by implementing quick_mode in PF_RING. When you load the module with insmod pf_ring.ko quick_mode=1, PF_RING optimizes its operations for multi-queue RX adapters and for applications that capture traffic from several RX queues simultaneously. The idea behind quick_mode is that you should use it whenever you are interested only in maximum packet capture performance and do not need PF_RING features such as packet filtering and plugins.

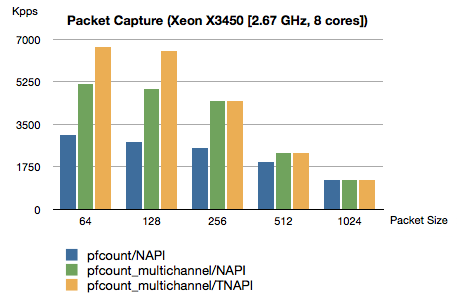

We tested PF_RING over NAPI against TNAPI on a low-end server equipped with an entry-level Xeon processor, so that the results reflect the minimum packet capture performance you can expect. The test results are shown below; in short, they say that:

- With packets of 512 bytes or more, TNAPI and PF_RING-over-NAPI show similar performance: both operate at wire rate, capturing all available packets.

- With 64-byte packets and a multi-threaded, multi-queue poller application such as pfcount_multichannel (all apps can be found in PF_RING/userland/examples), you can capture 5.1 million packets/sec over NAPI and 6.7 million packets/sec over TNAPI.

- This means that with small packets TNAPI offers roughly a 30% performance increase over NAPI (6.7 vs 5.1 million packets/sec).

In our experience, 10 Gbit links carry on average no more than 2 million packets/sec, so this test shows that the performance offered by PF_RING in combination with TNAPI (and, to some extent, even NAPI) is more than adequate, as it can capture more than 6.5 million packets/sec.

Is this enough for you? If not, we’ll soon introduce a 10 Gbit DNA driver developed with Silicom, so you can see how far we have pushed packet capture limits.

Stay tuned.

Test Environment

- Supermicro server equipped with a single Xeon X3450 running at 2.67 GHz (4 physical cores with Hyper-Threading, 8 logical cores in total)

- PF_RING 4.6.4 and ixgbe driver (SVN release 4601)

- Intel 82598/82599-based adapter

- insmod pf_ring.ko transparent_mode=2 quick_mode=1

- Interrupts balanced as follows: PF_RING/drivers/intel/ixgbe/ixgbe-3.1.15-FlowDirector-NoTNAPI/scripts/set_irq_affinity.sh eth4

- pfcount -i eth4 -w 5000 -b 99 -l <packet size (depends on the test)>

- pfcount_multichannel -i eth4 -w 5000 -b 99 -l <packet size (depends on the test)>