PF_RING ZC (Zero Copy) is a flexible packet processing framework that allows you to achieve 100+ Gbit line-rate packet processing (both RX and TX) at any packet size. It implements zero-copy operations, including patterns for inter-process and inter-VM (KVM) communication.

It features a clean and flexible API that implements simple building blocks (queue, worker and pool) that can be used from threads, applications and Virtual Machines to build zero-copy packet processing patterns.

PF_RING ZC drivers can be used in either kernel or bypass mode. Once installed, the drivers operate as standard Linux drivers, so you can do normal networking (e.g. ping or SSH). When used from PF_RING they are quicker than vanilla drivers, as they interact directly with it. When the same interface is opened in zero-copy mode (e.g. pfcount -i zc:eth1), the device becomes unavailable to standard networking because it is accessed in kernel-bypass mode. Once the application accessing the device is closed, standard networking activities can take place again.

at a glance

Key Features

- User-space zero-copy drivers for extreme packet capture/transmission speed, as the NIC NPU (Network Processing Unit) pushes packets to the application without any kernel intervention

- Send and receive packets at up to 100 Gbit wire speed, at any packet size

- Library for distributing packets in zero-copy across threads, applications and Virtual Machines

- Zero-copy support for all Intel adapters, NVIDIA (Mellanox) ConnectX and BlueField, Napatech, Silicom FPGA (Fiberblaze)

- Flexible zero-copy packet processing API to implement pipelines, map-reduce and many other processing patterns

- Hardware-based packet filtering on (selected) commodity adapters including Intel, NVIDIA and FPGA adapters

- nBPF support to convert human-readable BPF-like filters into hardware filters

- Hardware timestamps with nanosecond precision (selected adapters)

Ideal for Every Environment

Use Cases

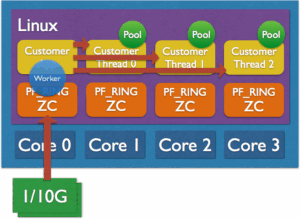

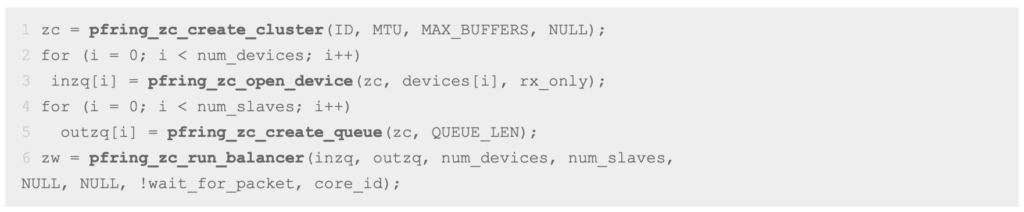

Custom Zero-Copy Load-Balancing

PF_RING ZC comes with a simple API that lets you build a complex application in a few lines of code. PF_RING includes a sample application, zbalance_ipc, that implements load balancing using this API.

The following example shows how to create an aggregator + balancer application in 6 lines of code.

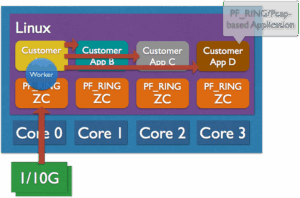
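To make the balancing step more concrete, here is a minimal, self-contained sketch of a distribution function of the kind a balancer might use to pick an output queue. It is not the PF_RING ZC API: `struct flow_key`, `flow_hash` and `pick_queue` are hypothetical names, and the hash itself is an assumption chosen only because it is direction-independent, so both directions of a flow land on the same consumer.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical flow key for illustration; not a PF_RING ZC type. */
struct flow_key {
    uint32_t src_ip, dst_ip;
    uint16_t src_port, dst_port;
    uint8_t  proto;
};

/* Direction-independent hash: swapping source and destination
 * yields the same value because the sums are commutative. */
static uint32_t flow_hash(const struct flow_key *k)
{
    uint32_t h = k->src_ip + k->dst_ip;            /* wraps modulo 2^32 */
    h += (uint32_t)k->src_port + (uint32_t)k->dst_port;
    h += k->proto;
    h ^= h >> 16;                                  /* simple bit mixing */
    h *= 0x45d9f3bu;
    h ^= h >> 16;
    return h;
}

/* Map a flow to one of num_queues output queues (num_queues must be > 0). */
static size_t pick_queue(const struct flow_key *k, size_t num_queues)
{
    return (size_t)(flow_hash(k) % num_queues);
}
```

Keeping both directions of a flow on the same queue matters when the consumers track per-flow state; a plain round-robin policy would spread load evenly but split flows across consumers.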

Zero-Copy Traffic Fan-Out

PF_RING ZC can perform zero-copy operations across threads, applications and VMs. You can balance packets across applications in zero-copy, or implement packet fan-out, which simply sends the same packet (by reference) to multiple consumer applications, all in zero-copy, at line rate.

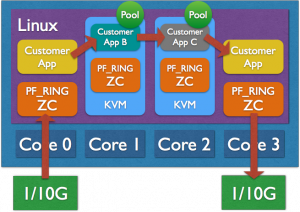
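The idea of sending one packet "by reference" to several consumers can be sketched as follows. This is a single-threaded toy model under stated assumptions, not the PF_RING ZC implementation: `struct pkt_buf`, `struct queue`, `fanout` and `release` are hypothetical names, and a real cross-process design would need shared memory and lock-free queues.

```c
#include <stddef.h>
#include <stdint.h>

#define QUEUE_LEN 8   /* assumed queue depth, power of two */

/* Hypothetical packet buffer with a reference count. */
struct pkt_buf { uint8_t data[64]; size_t len; unsigned refs; };

/* Hypothetical queue that carries pointers, never payload bytes. */
struct queue { struct pkt_buf *slots[QUEUE_LEN]; size_t head, tail; };

static int enqueue(struct queue *q, struct pkt_buf *b)
{
    if (q->tail - q->head == QUEUE_LEN) return -1;   /* queue full */
    q->slots[q->tail++ % QUEUE_LEN] = b;
    return 0;
}

static struct pkt_buf *dequeue(struct queue *q)
{
    if (q->head == q->tail) return NULL;             /* queue empty */
    return q->slots[q->head++ % QUEUE_LEN];
}

/* Hand the same buffer to every consumer queue; only the pointer moves.
 * Returns how many consumers received it. */
static unsigned fanout(struct pkt_buf *b, struct queue *qs, size_t n)
{
    unsigned delivered = 0;
    for (size_t i = 0; i < n; i++) {
        if (enqueue(&qs[i], b) == 0) {
            b->refs++;
            delivered++;
        }
    }
    return delivered;
}

/* Called by a consumer when done; returns 1 once the buffer is free again. */
static int release(struct pkt_buf *b)
{
    return --b->refs == 0;
}
```

Every consumer dequeues the same address, so the payload is never duplicated; the reference count only decides when the buffer may be reused.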

Zero-Copy Operations to Virtual Machines

PF_RING ZC allows you to forward (both RX and TX) packets in zero-copy to KVM Virtual Machines without using techniques such as PCIe passthrough. Thanks to the dynamic creation of ZC devices on VMs, you can capture and send traffic in zero-copy from VMs without having to patch the KVM hypervisor code or to start KVM only after your ZC devices have been created. In essence, you can capture traffic at line rate on KVM VMs using the same command you would use on a physical host, without changing a single line of code.

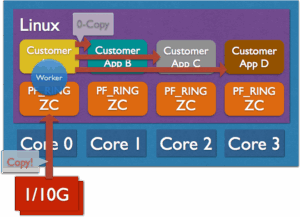

Implement Zero-Copy Processing on Non Zero-Copy Devices

In PF_RING ZC you can use the zero-copy framework even with non-PF_RING-aware drivers. This means that you can dispatch, process, originate, and inject packets into the zero-copy framework even if they did not originate from ZC devices.

Once a packet has been copied (one copy) into the ZC world, it is processed in zero-copy for the rest of its lifetime. For instance, the zbalance_ipc demo application can read packets in 1-copy mode from a non-PF_RING-aware device (e.g. a Wi-Fi device or a Broadcom NIC) and send them into ZC, where all further operations on them are zero-copy.
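The one-copy-then-zero-copy pattern described above can be illustrated with a small buffer pool. This is a simplified sketch, not PF_RING ZC code: `struct zbuf`, `struct pool`, `ingest_one_copy` and the sizes are assumptions made for the example. The single `memcpy` happens at ingest; afterwards only the buffer pointer is passed around.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define POOL_SIZE 4      /* assumed number of buffers */
#define BUF_LEN   2048   /* assumed buffer size in bytes */

/* Hypothetical pool buffer; not a PF_RING ZC type. */
struct zbuf { uint8_t data[BUF_LEN]; size_t len; };

struct pool {
    struct zbuf  bufs[POOL_SIZE];
    struct zbuf *free_list[POOL_SIZE];
    size_t       nfree;
};

static void pool_init(struct pool *p)
{
    for (size_t i = 0; i < POOL_SIZE; i++)
        p->free_list[i] = &p->bufs[i];
    p->nfree = POOL_SIZE;
}

/* The only copy: bring a packet from a foreign source into a pool buffer.
 * Returns NULL if the pool is empty or the packet does not fit. */
static struct zbuf *ingest_one_copy(struct pool *p, const void *src, size_t len)
{
    if (p->nfree == 0 || len > BUF_LEN) return NULL;
    struct zbuf *b = p->free_list[--p->nfree];
    memcpy(b->data, src, len);
    b->len = len;
    return b;
}

/* Return a buffer to the pool once all processing stages are done with it. */
static void pool_release(struct pool *p, struct zbuf *b)
{
    p->free_list[p->nfree++] = b;
}
```

Downstream stages receive the `struct zbuf *` returned by `ingest_one_copy` and operate on it in place, which is what keeps the rest of the pipeline copy-free.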

Kernel Bypass and IP Stack Packet Injection

Contrary to other kernel-bypass technologies, with PF_RING ZC you can decide at any time which of the packets received in kernel bypass to inject into the standard Linux IP stack. PF_RING comes with an IP stack packet injection module called stack for this purpose. All you need to do is open the device “stack:eth1” and send your packets to it; they are pushed to the IP stack as if they had been received from eth1.
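Which packets to hand back to the kernel is an application decision. As one possible policy, the sketch below selects ARP and ICMPv4 frames for injection so that address resolution and ping keep working, while everything else stays in kernel bypass. The policy, the function name `should_inject_to_stack` and the constants are assumptions made for illustration; the code only classifies a raw Ethernet frame and does not call any PF_RING function. VLAN tags and IPv6 are deliberately not handled.

```c
#include <stddef.h>
#include <stdint.h>

#define ETH_HDR_LEN   14
#define IPV4_MIN_HDR  20
#define ET_IPV4       0x0800   /* EtherType for IPv4 */
#define ET_ARP        0x0806   /* EtherType for ARP */
#define PROTO_ICMPV4  1        /* IPv4 protocol number for ICMP */

/* Returns 1 if the frame should be injected into the kernel IP stack
 * under the example policy (ARP and ICMPv4), 0 otherwise. */
static int should_inject_to_stack(const uint8_t *frame, size_t len)
{
    if (len < ETH_HDR_LEN) return 0;               /* truncated frame */

    uint16_t ethertype = (uint16_t)((frame[12] << 8) | frame[13]);
    if (ethertype == ET_ARP) return 1;

    if (ethertype == ET_IPV4 && len >= ETH_HDR_LEN + IPV4_MIN_HDR)
        return frame[ETH_HDR_LEN + 9] == PROTO_ICMPV4;  /* protocol field */

    return 0;
}
```

An application would apply a check like this to each packet received in kernel bypass and send the selected ones to the stack device described above.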

Specifications

Tech Specs

- Linux

- C API

- x86 64-bit platforms (Intel and AMD)

- Ethernet

- IPv4/IPv6

- TCP/UDP/ICMP/Any

- GTP/GRE/MPLS/VXLAN/PPP

models

Choose Your Model

Did you already install the software?

Select the model. Different models unlock different adapters and capacity. Check the comparison table.

Intel/NVIDIA 10-100 Gbit

- Supported Intel families: ixgbe, i40e, ice

- Supported NVIDIA/Mellanox models: ConnectX 5/6

- Hardware timestamps (on selected models)

- Hardware filters (on selected models)

- 10-100 Gbit

Napatech/Silicom FPGA

- Supported adapters: Napatech, Silicom/Fiberblaze

- Hardware filtering

- Hardware timestamps (nanoseconds)

- PCAP segment mode

- Flow offload (on Napatech)

- 10-100 Gbit