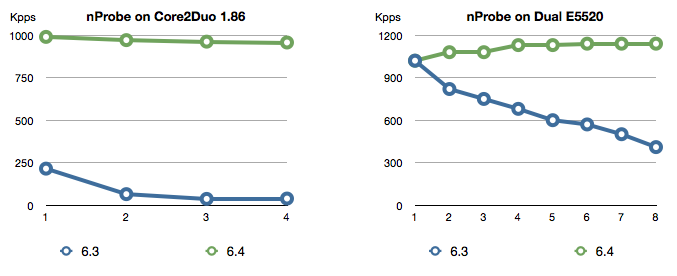

Release 6.3 of nProbe targeted IPFIX compatibility. In the newly released 6.4.x, the main focus has been on scalability and performance. Up to 6.3, the nProbe architecture did not really exploit multicore systems, a legacy of its earlier versions. With this release nProbe reaches a new level, as the graphs show.

Test setup: traffic generated with an IXIA 400, flows lasting 5 seconds and exported in NetFlow v5 format, PF_RING 4.6.3, and an Intel e1000e capture adapter with a PF_RING-aware driver (no TNAPI). Both graphs plot the sustained throughput with no packet loss (Y axis, in Kpps, i.e. thousands of packets per second, a much more demanding metric than volume in Gbps) against the number of threads used by nProbe (X axis, set with `-O <number of threads>`).
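
To see why packets per second is the harder metric, it helps to compute how many packets an Ethernet link can carry at a given frame size. The sketch below is plain arithmetic, not part of nProbe: it assumes standard Ethernet wire overhead (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap) and frame sizes that include the 4-byte FCS. The function name `max_pps` is hypothetical.

```python
# Ethernet per-frame overhead on the wire:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap
WIRE_OVERHEAD_BYTES = 20


def max_pps(link_bps: float, frame_bytes: int) -> float:
    """Theoretical maximum packets per second on an Ethernet link.

    frame_bytes is the Ethernet frame size including the 4-byte FCS
    (64 bytes is the minimum legal frame size).
    """
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_per_frame


if __name__ == "__main__":
    # Same link speed, very different packet rates depending on frame size
    for link_gbps in (1, 10):
        for frame in (64, 512, 1518):
            pps = max_pps(link_gbps * 1e9, frame)
            print(f"{link_gbps:>2} Gbit/s, {frame:>4}-byte frames: {pps / 1e3:10.1f} Kpps")
```

With minimum-size 64-byte frames, a 1 Gbit/s link carries about 1.49 Mpps and a 10 Gbit/s link about 14.88 Mpps, while the same links filled with 1518-byte frames carry only about 81 Kpps and 813 Kpps. A probe's per-packet cost therefore dominates at small frame sizes, which is why a loss-free Kpps figure says more than a Gbps figure.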

As you can see, on a dual-core machine the new version performs much better than the previous one. The main advantage, however, is that this version processes at least 1 Mpps with no loss on both machines. If you use a PF_RING-aware driver on top of a multi-queue card, you can scale NetFlow monitoring to multi-Gbit and even 10 Gbit rates by:

- binding one nProbe instance (in single-thread mode) to each core;

- binding each nProbe instance to one RX queue.
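
A minimal sketch of the one-instance-per-queue layout, under several assumptions not stated above: that the card exposes as many RX queues as there are cores, that PF_RING's `device@queue` naming (e.g. `eth1@0`) selects a single RX queue, that `-O 1` puts nProbe in single-thread mode, and that the Linux `taskset` utility is used for core pinning. The helper `per_queue_commands` is hypothetical; collector, export-format and IRQ-affinity settings are deliberately left out.

```python
import shlex


def per_queue_commands(iface: str, num_queues: int, first_core: int = 0) -> list[str]:
    """Build one shell command per RX queue.

    Instance i captures only from RX queue i of `iface` and is pinned
    to CPU core first_core + i, so queues and cores map one-to-one.
    """
    cmds = []
    for queue in range(num_queues):
        core = first_core + queue
        argv = [
            "taskset", "-c", str(core),  # pin the process to a single core
            "nprobe",
            "-i", f"{iface}@{queue}",    # assumption: PF_RING device@queue syntax
            "-O", "1",                   # assumption: one thread per instance
        ]
        # shlex.join quotes any argument that would need it in a shell
        cmds.append(shlex.join(argv))
    return cmds


if __name__ == "__main__":
    # Example: a 4-queue card on eth1, instances on cores 0..3
    for cmd in per_queue_commands("eth1", 4):
        print(cmd)
```

Keeping each instance single-threaded and bound to its own queue and core avoids sharing state between instances, so throughput can grow with the number of queues the card's hardware hashing spreads traffic across.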

Guess what you can achieve when running nProbe on top of TNAPI… Of course, you can either run nProbe on your own server or get a turn-key nBox appliance that includes nProbe (see this video created by our Plixer colleagues for more information about the nBox).