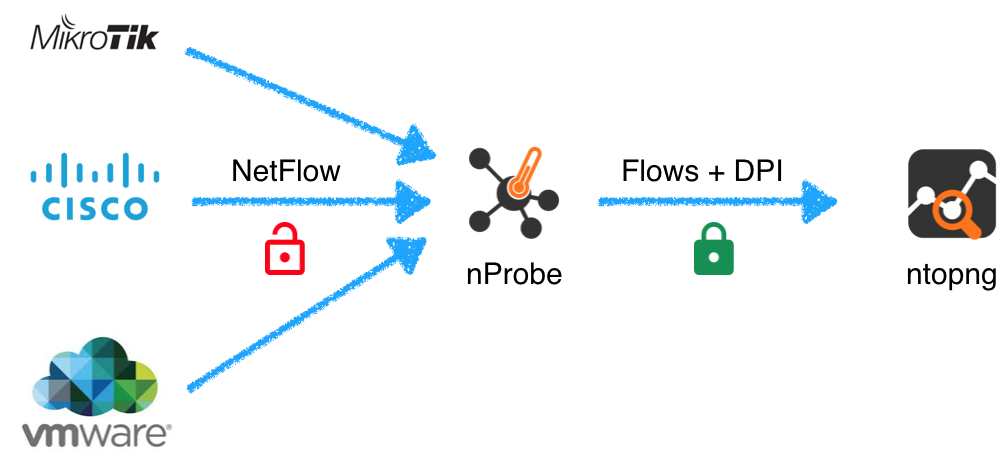

Most people use nProbe and ntopng to collect flows using an architecture similar to the one below

where nprobe and ntopng are started as follows:

- `nprobe -3 <collector port> -i none -n none --zmq "tcp://*:1234" --zmq-encryption-key <pub key>`
- `ntopng -i tcp://nprobe_host:1234 --zmq-encryption-key <pub key>`

In this case ntopng communicates with nProbe over an encrypted channel, and flows are sent in a compact binary format for maximum performance. If you do not need nProbe to cache and aggregate flows, you can also add `--collector-passthrough` on the nProbe side to further increase the flow collection rate.

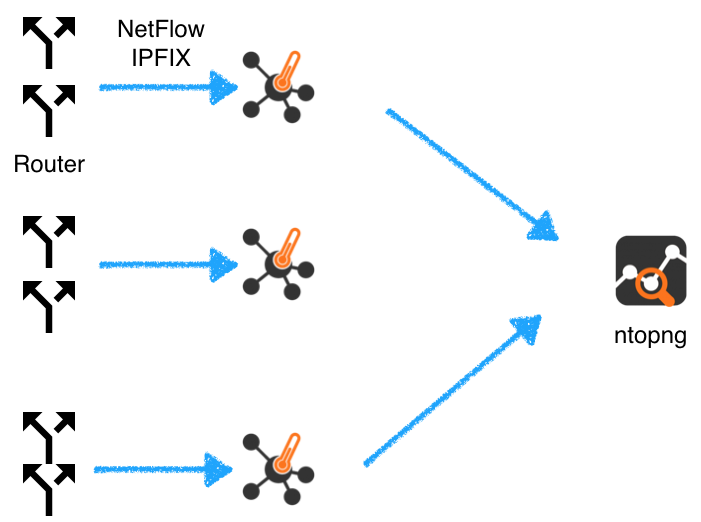

This setup is good as long as you have a reasonable amount of flows to collect according to the system performance. In case you have too many flows to collect (e.g. you have many routers sending nProbe, or a big router sending many flows per second) this setup does not no scale up because it does not take advantage of multicore architectures. The solution is to

- Distribute the load across multiple (instead of one) nProbe instance.

- On ntopng collect flows over multiple ZMQ interfaces and eventually aggregate them with a view interface.

In essence you need to adopt an architecture as follows

where you start nProbe/ntopng as follows:

- `nprobe -i none -n none -3 2055 --zmq tcp://127.0.0.1:1234`
- `nprobe -i none -n none -3 2056 --zmq tcp://127.0.0.1:1235`
- `nprobe -i none -n none -3 2057 --zmq tcp://127.0.0.1:1236`
- `ntopng -i tcp://127.0.0.1:1234 -i tcp://127.0.0.1:1235 -i tcp://127.0.0.1:1236 -i view:all`
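The port numbering in the commands above follows a simple pattern: instance number *i*, counting from 0, listens on collector port 2055+*i* and publishes on ZMQ port 1234+*i*. This makes it easy to extend to more instances. The Python sketch below builds the command lines for any number of instances. `build_commands` and its parameters are hypothetical names used only for illustration, and the function only produces strings; it does not start any process.

```python
def build_commands(n_instances, first_collector_port=2055, first_zmq_port=1234,
                   zmq_host="127.0.0.1"):
    """Build nProbe/ntopng command lines for the multi-instance setup (illustrative only).

    Assumes the numbering used in the text: collector port first_collector_port + i,
    ZMQ port first_zmq_port + i for instance i (counting from 0).
    """
    if n_instances < 1:
        raise ValueError("need at least one nProbe instance")
    nprobe_cmds = []
    ntopng_args = ["ntopng"]
    for i in range(n_instances):
        endpoint = f"tcp://{zmq_host}:{first_zmq_port + i}"
        # One nProbe per collector port, each publishing on its own ZMQ endpoint.
        nprobe_cmds.append(
            f"nprobe -i none -n none -3 {first_collector_port + i} --zmq {endpoint}"
        )
        # ntopng gets one ZMQ interface per nProbe instance.
        ntopng_args += ["-i", endpoint]
    # Aggregate all ZMQ interfaces into a single view interface.
    ntopng_args += ["-i", "view:all"]
    return nprobe_cmds, " ".join(ntopng_args)


if __name__ == "__main__":
    nprobes, ntopng = build_commands(3)
    for cmd in nprobes:
        print(cmd)
    print(ntopng)
```

With `n_instances=3` it reproduces the four commands listed above.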

In this case each nProbe instance processes flows concurrently, and the same happens on ntopng. All you need to do is share the ingress load across the nProbe instances so that each one receives a similar number of flows, for example by pointing different routers at different collector ports (2055, 2056, 2057).
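How you split the ingress load is up to you. One simple approach is sketched below; it is not a feature of nProbe. Estimate the flow rate of each exporter, then assign exporters to collector ports greedily, heaviest first, always picking the least-loaded instance. The exporter names and rates are made-up figures, and `assign_exporters` is a hypothetical helper. You would then configure each router to export to the port it was assigned.

```python
import heapq


def assign_exporters(exporter_rates, collector_ports):
    """Greedily assign exporters to nProbe collector ports, heaviest exporter first.

    exporter_rates: dict of exporter name -> estimated flows/sec (illustrative figures).
    collector_ports: ports of the nProbe instances, e.g. [2055, 2056, 2057].
    Returns a dict of port -> list of exporter names.
    """
    if not collector_ports:
        raise ValueError("need at least one collector port")
    # Min-heap of (current load, port): the least-loaded instance is popped first.
    heap = [(0, port) for port in collector_ports]
    heapq.heapify(heap)
    assignment = {port: [] for port in collector_ports}
    for name, rate in sorted(exporter_rates.items(), key=lambda kv: kv[1], reverse=True):
        load, port = heapq.heappop(heap)
        assignment[port].append(name)
        heapq.heappush(heap, (load + rate, port))
    return assignment


if __name__ == "__main__":
    rates = {"core-rtr": 30000, "edge-a": 12000, "edge-b": 10000,
             "edge-c": 9000, "branch": 4000}
    for port, names in assign_exporters(rates, [2055, 2056, 2057]).items():
        print(port, names)
```

In this example `core-rtr` ends up alone on port 2055, and that instance still carries the largest load. Assigning whole exporters to instances cannot split a single heavy exporter, which is exactly the situation described next.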

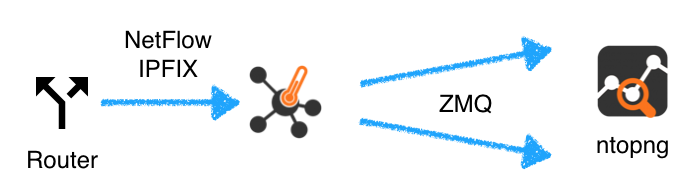

Instead, in case you have a single router exporting flows towards the same nProbe instance, the above trick cannot be used so we need to load balance differently. This can be achieved as follows

where you configure nProbe this way:

- `nprobe -i none -n none -3 2055 --collector-passthrough --zmq tcp://127.0.0.1:1234 --zmq tcp://127.0.0.1:1235 --zmq tcp://127.0.0.1:1236`
- `ntopng -i tcp://127.0.0.1:1234 -i tcp://127.0.0.1:1235 -i tcp://127.0.0.1:1236 -i view:all`

In this case nProbe load balances the collected flows across multiple egress ZMQ endpoints, and ntopng collects flows concurrently because every ZMQ interface runs on a separate thread.
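The exact policy nProbe uses to pick one of the `--zmq` endpoints is not covered here. Purely to illustrate the idea, the sketch below shows one common way to do it. It hashes a direction-independent flow key and uses the result to pick an endpoint. That way, all records of the same flow (in both directions) land on the same ntopng interface, while different flows spread roughly evenly. `pick_endpoint` and the hashing scheme are assumptions, not nProbe code.

```python
import hashlib
from collections import Counter

# Hypothetical endpoint list, mirroring the --zmq endpoints in the nProbe command above.
ENDPOINTS = [
    "tcp://127.0.0.1:1234",
    "tcp://127.0.0.1:1235",
    "tcp://127.0.0.1:1236",
]


def pick_endpoint(src_ip, dst_ip, src_port, dst_port, proto, endpoints=ENDPOINTS):
    """Map a flow to one endpoint (illustrative policy, not nProbe's actual code)."""
    # Order the two (ip, port) ends so that both directions of a flow give the same key.
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{a[0]}|{a[1]}|{b[0]}|{b[1]}|{proto}".encode()
    # Stable 64-bit digest; Python's built-in hash() of str is randomized per process.
    digest = hashlib.blake2b(key, digest_size=8).digest()
    return endpoints[int.from_bytes(digest, "big") % len(endpoints)]


if __name__ == "__main__":
    # 30,000 synthetic flows from one client towards distinct servers on port 443.
    counts = Counter(
        pick_endpoint("10.0.0.1", f"192.168.{i // 256}.{i % 256}", 40000 + i % 1000, 443, 6)
        for i in range(30000)
    )
    for endpoint, n in sorted(counts.items()):
        print(endpoint, n)
```

A stable digest (`hashlib.blake2b`) is used instead of Python's built-in `hash()`. For strings, `hash()` is randomized per process, so it would map the same flow to different endpoints across restarts.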

Please note that:

- Using `--collector-passthrough` is optional, but it reduces the load on nProbe, which is desirable if you have a big router exporting flows towards the same instance.
- The above solution can also be applied to packet processing (`nprobe -i ethX`) and not just to flow collection (in this case `--collector-passthrough` is not necessary). In general, the more you spread the load across interfaces, the more you exploit multicore systems and the better your system scales.

Enjoy!