We are pleased to introduce the nProbe 9.0 stable release, whose two main features are traffic behaviour analysis and high-speed flow collection.

Traffic Behaviour Analysis

When nProbe™ development started in 2002, the idea was to create a drop-in replacement for the physical probes present in routers. Later, the advent of IPFIX pushed the monitoring community towards standardisation of flow exports and promoted interoperability across probes and collectors. Then the market started to ask for visibility solutions (and not just traffic accounting), and we developed nDPI™ to go beyond ports and protocols and tell exactly which application protocol is being used. In the past months, in addition to further improving DPI, we have decided to move into the new land of traffic behaviour analysis. The recent changes in nDPI are just a part of the work we’re carrying out, which will be completed in the next few months. In essence, the idea is to use tools such as nProbe to fingerprint traffic not just in terms of protocol but also in terms of behaviour, i.e. how a certain host behaves when using a specific protocol to talk with a remote site. The reasons are manifold, including the ability to interpret encrypted traffic that can no longer be inspected and thus leaves network analysts blind. This is the direction we’ve taken, and it will be consolidated in the next nProbe release. In essence, nProbe has to be able to answer questions like: is my surveillance camera working normally, or has its behaviour changed?

Behaviour analysis in nProbe is based on time and payload packet length bins, which are basically counters of the values that fall within a specific range.

- %SEQ_PLEN

It classifies the packet payload length (past the three-way handshake, 3WH, for TCP) of the first 256 flow packets into 6 bins: <= 64, 65-128, 129-256, 257-512, 513-1024, >= 1025. For example, if your payload lengths in bytes are 100, 400, 256, 1064, 1400, then SEQ_PLEN will be 0,1,1,1,0,2.

- %SEQ_TDIFF

It classifies the packet IAT (inter-arrival time) (past the 3WH for TCP) of the first 256 flow packets into 6 bins: <= 1 ms, 1-5 ms, 5-10 ms, 10-50 ms, 50-100 ms, 100+ ms.

- %ENTROPY_CLIENT_BYTES and %ENTROPY_SERVER_BYTES

Byte entropy of the packet payload for both directions. A high value means that the bytes are more spread out (high variance), whereas a low value means the data is more predictable. Many protocols have typical values: DNS 4.2, TLS 7.7, NetFlow 4, SkypeCall 6.
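To make the bin and entropy definitions concrete, here is a minimal Python sketch. It is not nProbe code: the function names `seq_plen` and `byte_entropy` are hypothetical, and the entropy is assumed to be Shannon entropy in bits per byte, which is consistent with the typical values quoted above (all below 8) but is not stated explicitly.

```python
import math
from bisect import bisect_left
from collections import Counter

# Inclusive upper bounds of the first five payload-length bins; anything
# larger falls into the sixth bin (>= 1025).
PLEN_UPPER_BOUNDS = [64, 128, 256, 512, 1024]
MAX_PACKETS = 256  # only the first 256 packets of a flow are classified


def seq_plen(payload_lengths):
    """Count payload lengths per bin, following the %SEQ_PLEN description."""
    bins = [0] * (len(PLEN_UPPER_BOUNDS) + 1)
    for length in payload_lengths[:MAX_PACKETS]:
        # bisect_left returns the index of the first bound >= length.
        bins[bisect_left(PLEN_UPPER_BOUNDS, length)] += 1
    return bins


def byte_entropy(payload: bytes) -> float:
    """Shannon entropy of the payload bytes in bits per byte (assumption)."""
    if not payload:
        return 0.0
    total = len(payload)
    counts = Counter(payload)
    return sum((n / total) * math.log2(total / n) for n in counts.values())


print(seq_plen([100, 400, 256, 1064, 1400]))  # [0, 1, 1, 1, 0, 2]
```

The printed list matches the SEQ_PLEN example above. With this definition, a payload made of a single repeated byte scores 0, while a payload in which all 256 byte values are equally frequent scores 8.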

These values are the first pillar of behaviour analysis. For instance, you can easily detect a scan or Heartbleed from the time/length bins, or see whether a flow misbehaves when its distribution changes with respect to typical values. If you apply this at the host level (ntopng already does it), you can complete this analysis in a simple yet effective way.
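One simple way to express "the distribution changes with respect to typical values" is to compare the normalised bins of a flow against a baseline. The sketch below uses the total variation distance. This is an illustrative choice, not the method used by nProbe or ntopng, and the baseline, the suspect flow and the threshold are all made-up numbers.

```python
def normalise(bins):
    """Turn bin counts into fractions that sum to 1 (all zeros if empty)."""
    total = sum(bins)
    return [count / total for count in bins] if total else [0.0] * len(bins)


def bin_distance(observed, baseline):
    """Total variation distance: 0 means same shape, 1 means no overlap."""
    p, q = normalise(observed), normalise(baseline)
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


# Hypothetical baseline: a camera that mostly sends large packets.
baseline = [2, 1, 1, 4, 20, 228]
# Hypothetical suspect flow made almost entirely of tiny packets.
suspect = [250, 4, 2, 0, 0, 0]

THRESHOLD = 0.5  # arbitrary illustrative cut-off, not an nProbe setting

print(bin_distance(baseline, baseline))             # 0.0
print(bin_distance(suspect, baseline) > THRESHOLD)  # True
```

The same comparison works for the inter-arrival time bins, since both are fixed-length vectors of counters.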

High Speed Flow Collection

Traditionally nProbe has focused mostly on the probe side. Over the years, with NetFlow/IPFIX becoming more pervasive in network devices, we have decided to enhance the flow collection capabilities of nProbe, in particular since people started to use ntopng to visualise data (10 years ago most people used nProbe to export flows towards commercial collectors rather than to visualise them with ntop tools). In order to increase the typical nProbe collection speed, we first implemented --disable-cache, which disables the nProbe cache for collected flows and thus emits flows quicker. In this release we introduce a new option named --collector-passthrough, which is basically a 1:1 conversion of collected flows into flows sent to ntopng (so the idea of this flag is to use it with ntopng to increase the collection speed). To further improve the speed, we are now using the new nDPI data serialiser, which can write data in both JSON (legacy) and a binary format that should be used when performance matters. Note that with --collector-passthrough you do not need to specify --disable-cache: in passthrough mode the cache is bypassed entirely, so that option has no effect.

The following table reports the test outcome on an Intel(R) Xeon(R) CPU E3-1230 v3 @ 3.30GHz, binding nProbe to a single core (yes, if you have 4 cores you can multiply the numbers below by a factor close to 4).

| nProbe Configuration (single core -g) | ZMQ Flow Format | ZMQ Flow Egress Rate Towards ntopng | Improvement (%) |
|---|---|---|---|
| -g 1 --zmq-format=j --disable-cache | JSON | 33k Flows/sec | (same as nProbe 8.6) |
| -g 1 --zmq-format=t --disable-cache | binary | 73k Flows/sec | +220 % |
| -g 1 --zmq-format=j --collector-passthrough | JSON | 58k Flows/sec | +175 % |
| -g 1 --zmq-format=t --collector-passthrough | binary | 220k Flows/sec | +666 % |

Bottom line: with nProbe 9.0, flow collection is up to 6.6x faster than with the old version (the percentages above express each rate relative to the 33k flows/sec baseline). Not bad! Note that binary mode requires ntopng version 3.9 or later.
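The ratios behind these figures can be recomputed from the egress rates in the table. The short sketch below only does that arithmetic. The variable and function names are illustrative.

```python
BASELINE_KFPS = 33  # JSON with --disable-cache, same rate as nProbe 8.6

# Egress rates from the table, in thousands of flows per second.
rates_kfps = {
    "binary, --disable-cache": 73,
    "JSON, --collector-passthrough": 58,
    "binary, --collector-passthrough": 220,
}


def speedup(rate, baseline=BASELINE_KFPS):
    """Return how many times faster a rate is than the baseline."""
    return rate / baseline


for name, rate in rates_kfps.items():
    print(f"{name}: {speedup(rate):.2f}x the baseline")
```

The last line reports 6.67x for binary passthrough, which is the 6.6x (or 666 %) quoted above once truncated.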

Flow Collection Across Firewalls

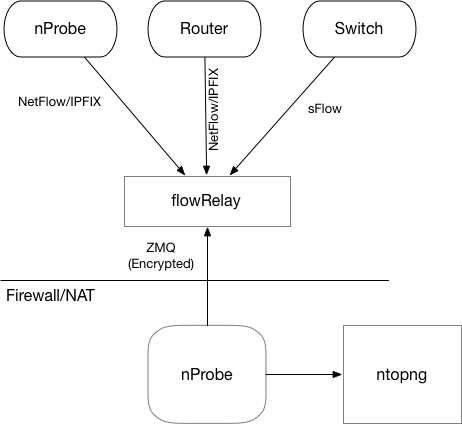

Firewalls and security devices are a must in every modern network in order to enforce security policies. However, if you have a router/switch/probe outside your firewall (e.g. on a DMZ or public cloud) and you want to collect NetFlow/sFlow/IPFIX using an nProbe sitting behind the firewall, this can be a problem. Another problem people sometimes face is the need to collect flows over the Internet: flows are sent in clear text, and having them transit a public network is never a good idea. For this reason we have developed a free nProbe companion tool named flowRelay that solves this problem. This is how flowRelay works.

$ flowRelay -h

Welcome to flowRelay v1.0: sFlow/NetFlow/IPFIX flow relay

Copyright 2019-20 ntop.org

flowRelay [-v] [-h] -z <ZMQ enpoint>] -c <port>

-z <ZMQ enpoint> | Where to connect to or accept connections from.

| Examples:

| -z tcp://*:5556c [collector mode]

-c <port> | Flow collection port

-k <ZMQ key> | ZMQ encryption public key

-v | Verbose

-h | Help

In essence, nProbe connects to flowRelay (the other way round won’t work due to firewall/NAT), which then forwards flows to nProbe via the encrypted ZMQ channel. flowRelay does not dissect the flow packets, but it checks that the received traffic is really NetFlow/sFlow/IPFIX, to avoid sending nProbe data that is not appropriate. Of course, for maximum security you can set an ACL that allows only selected probes/routers/switches to send data to flowRelay.
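A check of this kind can be done without dissecting the flows, by looking only at the version field at the start of each datagram. The sketch below is an assumption about how such a check could look, not flowRelay's actual code: `guess_flow_protocol` is a hypothetical helper that covers only the common versions (NetFlow v5/v9 and IPFIX carry a 16-bit version of 5, 9 or 10, while sFlow v5 starts with a 32-bit version of 5).

```python
import struct

# Version numbers carried in the first 16 bits of NetFlow and IPFIX headers.
NETFLOW_IPFIX_VERSIONS = {5: "NetFlow v5", 9: "NetFlow v9", 10: "IPFIX"}
SFLOW_VERSION = 5  # sFlow v5 datagrams start with a 32-bit version field


def guess_flow_protocol(datagram: bytes):
    """Return a protocol label if the header looks like a flow export, else None."""
    if len(datagram) < 4:
        return None
    # Network byte order, unsigned 16-bit version (NetFlow / IPFIX).
    (version16,) = struct.unpack_from("!H", datagram, 0)
    if version16 in NETFLOW_IPFIX_VERSIONS:
        return NETFLOW_IPFIX_VERSIONS[version16]
    # Network byte order, unsigned 32-bit version (sFlow).
    (version32,) = struct.unpack_from("!I", datagram, 0)
    if version32 == SFLOW_VERSION:
        return "sFlow v5"
    return None


print(guess_flow_protocol(struct.pack("!HH", 10, 16)))  # IPFIX
print(guess_flow_protocol(b"GET / HTTP/1.1"))           # None
```

A relay built along these lines would drop any datagram for which the helper returns `None` and forward the rest unchanged. A version check alone is a weak filter, which is why combining it with an ACL remains advisable.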

Enjoy!

Changelog

New Features

- Added flowRelay tool to collect flows through a firewall.

- Data serialisation based on nDPI: data can now be serialised in either JSON or binary format.

- Implemented %SEQ_PLEN, %SEQ_TDIFF, %ENTROPY_CLIENT_BYTES and %ENTROPY_SERVER_BYTES.

- Implemented --dump-bad-packets in collector mode for dumping invalid collected packets.

- Improved GTPv2 plugin with dissection of many new fields.

- Implemented GTP v1/v2 per IMSI and APN aggregation via --imsi-apn-aggregation.

- Added HPERM and TZSP encapsulation support.

- Added %SSL_CIPHER %SSL_UNSAFE_CIPHER %SSL_VERSION.

- Implemented JA3 support via %JA3C_HASH and %JA3S_HASH.

- Implemented %SRC_HOST_NAME and %DST_HOST_NAME for host symbolic name export (if known).

- Implemented IP-in-IP support.

- Various Diameter plugin improvements.

- Implemented --collector-passthrough.

- Added support for IXIA packet trailer.

- Extended statistics reported via ZMQ so ntopng can better monitor the nProbe status.

- Implemented ZMQ encryption.

- Various Kafka export improvements (export plugin).

- Added support for the latest ElasticSearch format (export plugin).

- Implemented flow collection drop counters on UDP socket (Linux only).

Fixes

- Fixed NetFlow Lite processing.

- Implemented various checks for discarding corrupted packets that caused nProbe to crash.

- Fixed flow upscale calculation (e.g. with sFlow traffic).

- Application latency is now computed properly for some specific TCP flows with retransmissions.

- Fixed DNS dissection over TCP.

- Improvements in Out-of-Order and retransmission accounting.

- Fixes for %EXPORTER_IPV4_ADDRESS with sFlow.

- Support for the new GeoIP database format.

- Extended the @NTOPNG@ template macro with new fields supported by ntopng.

- Fixed a bug in the RTP plugin that in some cases caused nProbe to crash.

- Various minor fixes in the nProbe engine.